What if I told you that the smartest enemies in your favorite games are actually a bunch of “if” statements in a trench coat? That the reason an NPC can act scared, brave, or suspicious isn’t because it’s sentient – it’s because someone told it exactly how to fake it? Today, we’re diving deep into the magic trick behind how video game AI works – how it’s changed over time, what problems it still faces, which games actually get it right, and whether the rise of machine learning will finally change the game.

Video Game AI Over Time

Let’s be clear right off the bat – video game AI is not the same thing as the AI you see in sci-fi movies or AI labs. The “intelligence” in game AI is all about illusion. It’s about crafting a behavior that feels believable, even if it’s just smoke and mirrors.

In the early days of gaming – think Pong, Pac-Man, and the original Doom – AI wasn’t much more than hardcoded reactions. If the player is here, do this. If not, do that. These were basic condition checks – “if this, then that” logic – and they worked because the games themselves were simpler.

But over time, players expected more. Enemies couldn’t just stand in place or trail after you like a Roomba. Developers began layering state machines, behavior trees, and priority systems to simulate what we’d consider intelligence.

By the time we hit the era of games like F.E.A.R. in 2005, the AI started to look – and feel – genuinely smart. That game used something called Goal-Oriented Action Planning (GOAP), which allowed AI agents to evaluate multiple options and pick the best course of action dynamically. Enemies in F.E.A.R. didn’t just charge at you – they’d flank you, use cover, and retreat if overwhelmed. They weren’t thinking, but they were convincingly pretending.

Still, let’s not kid ourselves. Even in modern titles, most game AI is about scripting and deception. It doesn’t learn, it doesn’t grow – it just runs the playbook someone wrote for it.

How Video Game AI Works

So what’s really going on under the hood when an enemy spots you, takes cover, flanks, and shouts for backup? How does a bunch of code simulate a decision like that? The answer is: it’s both simpler and more complicated than you’d think.

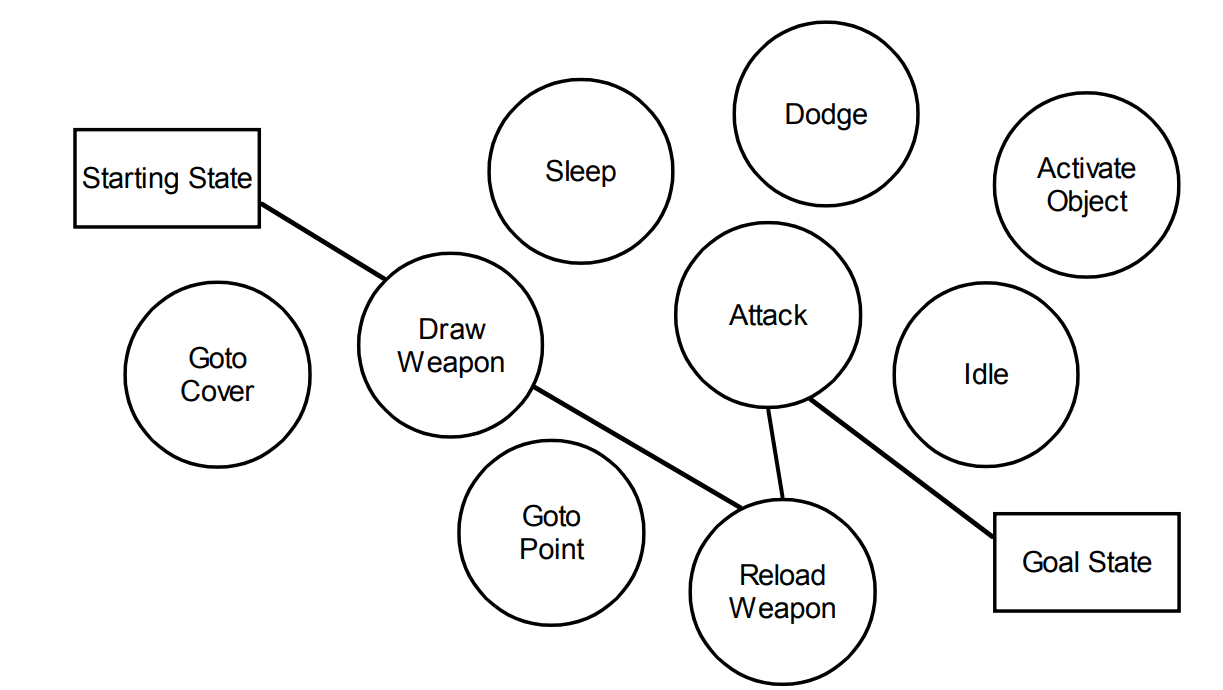

At its core, video game AI is a system of logic structures. The most basic of these is the Finite State Machine, or FSM. Picture a flowchart with states like “Idle,” “Patrolling,” “Chasing,” “Attacking,” and “Retreating.” The AI is in one state at a time, and transitions between them based on conditions. For example: “If player is spotted, switch from Patrolling to Chasing.” It’s clean, it’s fast, and it’s been the backbone of game AI for decades.
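To make the flowchart idea concrete, here’s a minimal Python sketch of a guard FSM using the states named above. The function name `next_state` and the three boolean inputs (`sees_player`, `in_range`, `low_health`) are assumptions made up for this illustration, not any particular engine’s API.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    PATROLLING = auto()
    CHASING = auto()
    ATTACKING = auto()
    RETREATING = auto()


def next_state(state, sees_player, in_range, low_health):
    """Return the state a guard should be in on the next tick."""
    # Low health overrides everything else in this toy example.
    if low_health:
        return State.RETREATING
    if state in (State.IDLE, State.PATROLLING):
        # "If player is spotted, switch from Patrolling to Chasing."
        return State.CHASING if sees_player else State.PATROLLING
    if state in (State.CHASING, State.ATTACKING):
        if not sees_player:
            return State.PATROLLING
        return State.ATTACKING if in_range else State.CHASING
    # RETREATING with health recovered: go back on patrol.
    return State.PATROLLING
```

Notice that a patrolling guard who spots a player already in range still goes to `State.CHASING` first and only reaches `State.ATTACKING` on the following tick. The answer depends on the current state, and that’s exactly what makes it a state machine rather than a pile of unrelated “if” checks.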

More complex games layer additional systems on top. Behavior Trees are another popular model – they’re hierarchical structures where the AI evaluates conditions top-down and executes tasks accordingly. They’re great for organizing logic in a readable, modular way. A behavior tree might say: “First, check if player is visible. If yes, choose an attack. If no, investigate last known location.”
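The example tree above can be sketched in a few lines of Python. This is a simplified illustration: the class names (`Condition`, `Action`, `Sequence`, `Selector`) and the dictionary used as a blackboard are assumptions for the sketch, and production behavior trees usually also have a “running” status for tasks that span several frames, which is left out here.

```python
SUCCESS, FAILURE = "success", "failure"


class Condition:
    """Leaf node: succeeds if a flag on the blackboard is truthy."""
    def __init__(self, key):
        self.key = key

    def tick(self, blackboard):
        return SUCCESS if blackboard.get(self.key) else FAILURE


class Action:
    """Leaf node: records its name instead of driving a real character."""
    def __init__(self, name):
        self.name = name

    def tick(self, blackboard):
        blackboard["log"].append(self.name)
        return SUCCESS


class Sequence:
    """Runs children in order; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            if child.tick(blackboard) == FAILURE:
                return FAILURE
        return SUCCESS


class Selector:
    """Tries children in order; succeeds as soon as one child succeeds."""
    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            if child.tick(blackboard) == SUCCESS:
                return SUCCESS
        return FAILURE


# "Check if player is visible. If yes, attack. If no, investigate."
tree = Selector(
    Sequence(Condition("player_visible"), Action("attack")),
    Action("investigate_last_known_location"),
)
```

Ticking the tree with `{"player_visible": True, "log": []}` leaves `["attack"]` in the log; with `player_visible` set to `False`, the first branch fails and the log ends up as `["investigate_last_known_location"]`.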

Then there’s Utility AI. This isn’t a tree – it’s a scoring system. Every possible action the AI could take gets a score based on the current game state. The AI picks the action with the highest score. So instead of following a strict script, it adapts based on the numbers. Low health? Score for retreating goes up. Enemy exposed? Score for attacking goes up. It allows for more flexible and reactive behavior.

Goal-Oriented Action Planning takes that further. GOAP doesn’t just pick a single action – it picks a sequence of actions to achieve a goal. It’s like mini-strategy planning inside the AI’s head. An enemy might decide it needs to “attack player,” and it’ll dynamically plan the steps needed to reach that state – find a weapon, approach the target, avoid hazards, then engage. This kind of planning creates emergent behavior – but it also adds complexity. We will go into more detail later.

Now, how complex is all this to code? Very. Game AI is not plug-and-play. You’re not just writing logic – you’re crafting systems that have to run every frame with high performance, in constantly shifting environments. An AI designer doesn’t just write code and walk away. They debug behaviors, tune values, simulate thousands of edge cases, and constantly test how the AI interacts with level geometry, player behavior, and other systems.

It’s not just the AI logic either. You need animation triggers, audio cues, pathfinding systems, navmesh data, line-of-sight calculations, combat routines, reaction timers, state memory, environmental awareness – the list grows fast.

So how much of a game’s development actually goes to AI?

It depends on the type of game, but here’s a rough breakdown: in a typical big-budget single-player action game, AI can account for 15 to 30% of the development effort when it’s a core gameplay element. That’s not just coding – it includes AI design, scripting, tuning, level-specific behavior logic, and QA testing.

In strategy games or stealth games – where AI is the game – it can go up to 40% or more. In racing or sports games, AI often determines how fun the whole thing feels, so the tuning and design effort is immense. On the flip side, in multiplayer-only shooters or puzzle games, AI might take a backseat or be minimal.

And remember – unlike graphics or sound, AI is invisible. You only notice it when it fails. That makes debugging incredibly hard. If an enemy walks into a wall, you have to ask: was it a bad decision? A pathfinding error? A collision bug? An animation glitch? AI problems are often problems with everything else too.

That’s why AI development is one of the most complex, thankless, and yet crucial parts of making a video game. It’s the invisible hand guiding immersion. If the AI breaks, the illusion shatters. If it works, you never even think about it.

It’s the ghost in the machine – and writing that ghost is some of the hardest work in game development.

Understanding GOAP: Goal-Oriented Action Planning

Let’s zoom in on one of the more advanced AI systems used in video games: Goal-Oriented Action Planning, or GOAP. This system isn’t just about picking a reaction – it’s about planning ahead, moment by moment, like a strategist with a mission board.

GOAP works by setting a desired outcome – let’s say, “attack the player” – and then figuring out which actions, in what sequence, will get the AI from its current state to that goal. The system looks at what it can do and simulates chains of actions to see which ones can accomplish the objective.

So instead of coding, “If you see the player, do X,” developers define what goals are available and what actions lead to them. The AI itself decides how to get there based on the current situation.
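Here’s a toy planner in Python to show the shape of the idea. Everything in it is an assumption for illustration: the action names, their costs, and the world-state flags are made up, and the search is a plain uniform-cost search standing in for whatever a shipping game would actually use.

```python
import heapq
from itertools import count

# Hypothetical actions: name -> (preconditions, effects, cost).
ACTIONS = {
    "pick_up_weapon": ({}, {"armed": True}, 2),
    "approach_target": ({}, {"in_range": True}, 3),
    "shoot": ({"armed": True, "in_range": True}, {"target_hurt": True}, 1),
    "melee": ({"in_range": True}, {"target_hurt": True}, 5),
}


def satisfies(state, conditions):
    """True if every required flag has the required value in state."""
    return all(state.get(k, False) == v for k, v in conditions.items())


def plan(start, goal, actions=ACTIONS, max_steps=6):
    """Return the cheapest list of action names reaching goal, or None."""
    tie = count()  # tie-breaker so the heap never has to compare dicts
    frontier = [(0, next(tie), dict(start), [])]
    seen = set()
    while frontier:
        cost, _, state, steps = heapq.heappop(frontier)
        if satisfies(state, goal):
            return steps
        key = frozenset(state.items())
        # Skip states already expanded and plans past the depth limit.
        if key in seen or len(steps) >= max_steps:
            continue
        seen.add(key)
        for name, (pre, effects, action_cost) in actions.items():
            if satisfies(state, pre):
                new_state = {**state, **effects}
                heapq.heappush(
                    frontier,
                    (cost + action_cost, next(tie), new_state, steps + [name]),
                )
    return None
```

Calling `plan({"armed": True}, {"target_hurt": True})` returns `["approach_target", "shoot"]`. Start the same agent unarmed and the planner works out on its own that grabbing a weapon first is still cheaper than a melee attack. Nobody scripted that sequence; it falls out of the action definitions. The `max_steps` cap is the kind of leash a real-time planner needs so the search can’t run away with the frame.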

This approach exploded into the mainstream with the previously mentioned F.E.A.R., a 2005 first-person shooter that stunned players with enemies that weren’t just reacting – they were adapting. Flanking, suppressing, falling back – they didn’t just feel smart, they looked smart. Behind the curtain? GOAP was evaluating options like “find cover,” “engage,” or “advance” based on player position, health, and tactical advantage.

The beauty of GOAP is that it’s modular. You can define new actions and goals, and the system dynamically recombines them. That flexibility allows emergent behaviors – stuff the designers didn’t even fully script. That’s why it feels like “the AI is thinking,” even though it’s just searching for a valid plan.

But GOAP comes at a cost. Every plan the AI considers has to be evaluated quickly, which limits how deep or complex the plan search can be. Plans that take more than a few steps can slow down performance or become invalid mid-action if the game state changes, forcing the AI to replan. So while GOAP adds depth, it also needs a careful leash to stay efficient.

Utility AI: Scoring Behavior Like a Game of Darts

Where GOAP is all about planning, Utility AI is about weighing options. It treats decisions like a ranking contest. Every potential action gets a score based on how valuable or useful it is in the current context, and the AI picks the highest-scoring option.

Imagine an enemy deciding between “run away,” “reload,” or “shoot.” Each action gets a utility score. If the player is close, health is low, and ammo is gone, “run away” might score the highest. But if they’ve got full health and a clean shot, “shoot” wins.

These scores aren’t just static – they’re often dynamic curves based on variables like distance, health, visibility, cover, and more. Developers use math functions to define how the score ramps up or down as those conditions change.
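As a rough sketch of what those curves can look like, here’s the “run away / reload / shoot” decision in Python. The specific formulas and weights are invented for this example; in a real game they’d be tuned by hand. All inputs are assumed to be normalized to the range 0 to 1.

```python
def clamp01(x):
    """Clamp a value into the 0..1 range."""
    return max(0.0, min(1.0, x))


def score_actions(health, ammo, distance):
    """Score each action; inputs are 0..1, distance 0 means point blank."""
    closeness = 1.0 - clamp01(distance)
    has_ammo = 1.0 if ammo > 0 else 0.0
    return {
        # Fear ramps up quadratically as health drops, more so up close.
        "run_away": (1.0 - health) ** 2 * (0.5 + 0.5 * closeness),
        # Reloading matters more with an empty magazine and is safer far away.
        "reload": (1.0 - ammo) * (0.3 + 0.7 * clamp01(distance)),
        # Shooting needs ammo and looks better when healthy and close.
        "shoot": has_ammo * health * (0.4 + 0.6 * closeness),
    }


def choose_action(health, ammo, distance):
    """Pick the highest-scoring action for the current situation."""
    scores = score_actions(health, ammo, distance)
    return max(scores, key=scores.get)
```

With low health, no ammo, and the player close, `choose_action(0.1, 0.0, 0.1)` returns `"run_away"`. With full health, a full magazine, and a clean shot, `choose_action(1.0, 1.0, 0.2)` returns `"shoot"`. Changing the enemy’s personality is just a matter of reshaping a curve; the selection logic never has to change.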

This makes Utility AI great for creating reactive behavior. Enemies don’t just follow a script – they weigh their surroundings and pick what makes the most sense. It’s fast, flexible, and easy to tweak without rewriting the logic.

Games like The Sims use a version of Utility AI for simulating daily routines – eating, sleeping, socializing – based on internal needs. FPS games like Killzone 2 and Halo often use utility systems to make enemies prioritize cover, regroup, or push when they sense weakness.

The downside? You lose long-term planning. Utility AI is greedy – it picks the best move right now, not over time. That’s where it can fall short compared to GOAP. But for fast-paced or sandbox-style games, utility-based systems strike a strong balance between flexibility and performance.

How AI Cheats in Your Favorite Strategy Games

Alright, let’s talk about the dirty little secret of strategy game AI: it cheats. A lot.

And not in a “wow, it’s really smart” kind of way. I mean literally – it breaks the rules to keep things interesting.

Why? Because strategy games like Civilization, Total War, and Age of Empires are brutally complex. The number of possible states in a late-game map is beyond human comprehension. It’s not just cities and armies – it’s diplomacy, tech trees, terrain advantages, unit counters, supply lines, and fog of war.

If the AI played by the exact same rules you do, it would lose. Fast. Or worse – it would stall out, take too long to think, and kill the pacing.

So developers give it “advantages.” Maybe it sees through the fog of war. Maybe it gets bonus resources. Maybe it knows your build order even when it shouldn’t. When those buffs scale up or down with how well the player is doing, the technique is often called “rubberbanding” – an artificial adjustment that keeps the AI competitive with a skilled player.
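Two of these advantages are easy to picture in code: a flat resource bonus tied to the difficulty setting, and a catch-up buff that grows as the AI falls behind. The Python below is a hypothetical sketch; the multiplier table, the function names, and the `strength` and `cap` parameters are assumptions for illustration, not values from any real game.

```python
# Hypothetical per-difficulty income multipliers for the computer player.
AI_INCOME_MULTIPLIER = {"easy": 0.8, "normal": 1.0, "hard": 1.5}


def ai_income(base_income, difficulty):
    """Income the AI actually receives for the same economy a human has."""
    return round(base_income * AI_INCOME_MULTIPLIER[difficulty])


def catch_up_multiplier(player_score, ai_score, strength=0.5, cap=2.0):
    """Scale the AI's bonus up as it falls behind the player.

    Returns 1.0 (no bonus) when the AI is level or ahead, and never
    more than cap, so the help stays hard to notice.
    """
    if ai_score <= 0:
        return cap
    ratio = player_score / ai_score
    return max(1.0, min(cap, 1.0 + strength * (ratio - 1.0)))
```

On “hard,” an economy worth 100 per turn pays the AI 150. If the player’s score is double the AI’s, `catch_up_multiplier(2000, 1000)` gives 1.5. The AI isn’t playing any better; it just gets more to play with.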

Sometimes the AI doesn’t even make decisions – it just fakes them. It might choose a scripted path to look “strategic,” but it’s just following an invisible track. Or it spawns reinforcements out of nowhere because the pacing demands it. Or it perfectly counters your moves even if it technically shouldn’t have the intel.

And here’s the twist: these cheats aren’t lazy – they’re necessary. Because strategy games are about fun, not fairness. You don’t want an AI that plays like a spreadsheet – you want one that feels like a cunning rival. Cheating is part of that illusion.

XCOM famously fudges its accuracy percentages: XCOM 2, for example, quietly adds hidden aim bonuses in the player’s favor on lower difficulties after a streak of misses. StarCraft AIs in campaign missions often have unlimited APM and resource boosts. Even Total War’s most aggressive armies often get morale bonuses or unit buffs behind the scenes.

So next time you get outmaneuvered by an AI opponent and think, “No way it should’ve known that”? You’re probably right. But that’s part of the design. The game’s not trying to be fair. It’s trying to make you feel like you’re playing against something clever – and that illusion is worth bending the rules.

What Are the Problems of Video Game AI?

Here’s the kicker – despite decades of advances, video game AI still faces major limitations. And most of them boil down to one thing: performance.

When you’re developing a game, especially a AAA title, you’re working with finite resources. CPU time is a precious commodity. The processor has to juggle physics, rendering, player input, sound, network data, and yeah – AI. At 60 frames per second, the entire frame gets roughly 16.7 milliseconds, and AI only gets a slice of that. You can’t afford to spend even half a second “thinking” about what an enemy should do. Decisions have to fit inside that budget, frame after frame, without dragging the whole game down.
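One common way to live within that kind of budget is to stop updating every agent on every frame and spread the thinking out round-robin instead. The article doesn’t describe this technique, so treat the Python sketch below as an illustrative assumption; the `AIScheduler` class and its `per_frame` parameter are invented for the example.

```python
class AIScheduler:
    """Updates only a slice of the agents each frame, round-robin."""

    def __init__(self, agents, per_frame):
        self.agents = list(agents)
        self.per_frame = per_frame  # how many agents may think per frame
        self.cursor = 0             # index of the next agent to update

    def update(self, think):
        """Call think(agent) for the next slice and return that slice."""
        if not self.agents:
            return []
        n = min(self.per_frame, len(self.agents))
        total = len(self.agents)
        batch = [self.agents[(self.cursor + i) % total] for i in range(n)]
        self.cursor = (self.cursor + n) % total
        for agent in batch:
            think(agent)
        return batch
```

With five agents and `per_frame=2`, the first frame updates agents one and two, the second updates three and four, and the third wraps around to five and one. Each enemy reacts a frame or two late, which players rarely notice, and the per-frame cost stays flat no matter how many enemies are on screen.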

That’s why developers use systems like Finite State Machines (FSMs) and Behavior Trees. These structures are fast, predictable, and easy to debug. You can see exactly why an enemy made a decision. But the trade-off? They don’t allow for a ton of complexity. You might want your AI to react like a human – hesitating, adapting, bluffing – but in practice, most of that gets cut down for performance reasons.

Then there’s the issue of the state space – the massive collection of all possible configurations in a game. A simple board game like tic-tac-toe has only a few thousand reachable positions. A modern 3D game? We’re talking millions or even billions. Strategy games like StarCraft? The number of possible states is beyond astronomical. Developers can’t afford to simulate or search that space in real time. So they use shortcuts – hardcoded reactions, reduced observability, and even outright cheating.

Yes – AI in games cheats all the time. It might have access to information it shouldn’t. It might magically “sense” where the player is. In horror games, it might teleport behind you just to create tension. And in strategy games, it might get free resources or know your troop movements to keep things competitive.

And that’s another problem: making the AI fun. An enemy that plays perfectly isn’t fun – it’s a wall. What you want is AI that makes believable mistakes. That sometimes falls for your trick. That reacts in ways you can read and exploit. In other words, AI that’s beatable, but not dumb.

Games With Good AI

So what does good AI look like? There are a few games that stand out – even years after their release.

F.E.A.R., as mentioned earlier, is legendary for its combat AI. Enemies flank, cover each other, and retreat when necessary – all based on dynamic goal evaluation.

The Halo series also gets a lot of praise. The Covenant enemies aren’t just bullet sponges – they react to your tactics. Grunts flee when leaders die. Elites dodge grenades and try to outmaneuver you. There’s a layered hierarchy to their behavior that makes every encounter feel dynamic.

Then there’s The Last of Us Part II, where the AI systems are deeply tied to emotional storytelling. Enemies call out each other’s names. They search in squads. They adapt their patrols based on what they’ve seen you do. It’s not just about shooting – it’s about making the world feel alive and responsive.

And don’t sleep on older examples like Alien: Isolation, where the xenomorph’s AI is designed not just to hunt you, but to toy with you. It’s actually a pair of AI systems – one that tracks your location, and one that controls the alien’s behavior. The result? It feels like it’s learning, even though it’s really just playing out a scripted illusion.

Will AI in Games Benefit from the AI Boom Today?

With the rise of machine learning, generative models, and large language models, you might be wondering – can this revolution finally bring real AI to games?

The answer is… complicated.

In theory, yes. We’ve already seen bots like DeepMind’s AlphaStar and OpenAI Five train to beat human pros in StarCraft II and Dota 2. These AIs weren’t scripted – they learned by playing millions of games, mostly against copies of themselves, and in AlphaStar’s case by first studying human replays. But here’s the catch: these systems were trained outside the game using massive compute resources. Once trained, they sent commands into the game from the outside, much like a human player would. They weren’t part of the game engine.

In actual games, integrating that kind of AI is much harder. Machine learning requires tons of data and a stable environment. But video games change constantly during development – new mechanics, new levels, new rules. Every tweak breaks the model, forcing developers to retrain the AI from scratch. That’s not sustainable.

And even if it were, ML models are huge. You can’t just drop one into a console game and expect it to run smoothly. They need servers, constant updates, and tons of memory. That’s not how games are built – especially ones that need to run offline.

So instead, machine learning is being used more behind the scenes. It helps generate content. It balances difficulty. It assists with animation and asset creation. But for now, it’s not powering your enemy NPCs. That job still goes to the good ol’ fashioned FSMs, behavior trees, and cleverly faked intelligence.

Maybe that’ll change. Maybe generative AI will let NPCs have realistic conversations one day. Maybe we’ll see procedural personalities that grow and evolve over a full RPG campaign. But right now? The smartest AI in games is still the one that’s best at pretending.

Because in the end, video game AI isn’t about being right – it’s about being fun.